Crawl using the SharePoint Optimized V2 connector

Decide what you need to crawl

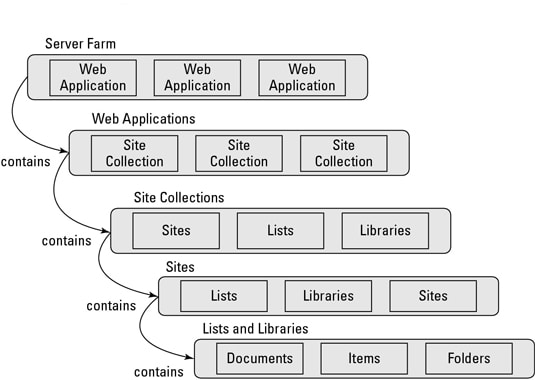

First understand the different types of objects in SharePoint.

You have to choose what you want to crawl.

Crawl all site collections

| This option is only available if you crawl with administrative permissions as listing all site collections in the web application requires administrative access. |

In the Fusion UI, specify the Web Application URL to your SharePoint web application and check Fetch all site collections.

This will now list all site collections and crawl them one at a time.

Crawl a subset of site collections

Do not check the Fetch all site collections checkbox and list each site collection you wish to crawl.

Specify each site collection relative to the Web Application URL.

Crawl a subset of sites, lists, or list items (URLs)

You can also limit your crawl to only crawl specific sites, lists, folders, files, or list items.

You will specify a single site collection in the Site Collection List as in the previous example.

Then you can Restrict to specific SharePoint items. Copy and paste the URL of the resource from the browser into this list of items.

When crawling, the connector will convert the URLs specified into inclusive regular expressions.

Decide how you would like to authenticate

With SharePoint, there are multiple options when it comes to authenticating.

On-premise - NTLM

Specify using the NTLM Authentication Settings in the Fusion UI.

SharePoint Online authentication options

When using the SharePoint Online connector, several options exist for authenticating.

SharePoint service account

This option is the same as logging in a normal user’s account.

If this user is not a SharePoint administrator, you will need to give this user access to each site collection you want to crawl individually.

Enter in the UI:

-

Username

-

Password

-

Tenant

App-only authentication - Azure AD with private key

This method of authentication is the most commonly used. It requires giving the application key Full Control permission, otherwise you get security authorization errors while crawling.

Enter in the UI:

-

Client ID

-

PFX key (in base 64 format)

-

PFX key password

-

Tenant

App-only authentication - Azure AD with OAuth

This method of authentication is the most commonly used. It requires giving the application key Full Control permission, otherwise you get security authorization errors while crawling.

Enter in the UI:

-

Client ID

-

Client Secret

-

Tenant

Understanding incremental crawls

After you have performed your first successful full crawl (no blocking errors), all subsequent crawls are incremental crawls.

Incremental crawls use SharePoint’s Changes API to do the following:

-

For each site collection, use the change token (timestamp) to get all changes since the full crawl was started:

-

Additions

-

Updates

-

Deletions

-

If you are crawling with Fetch all site collections checked, the incremental crawl also detects when a site collection itself was deleted and removes it from your index.

| During incremental crawl, all fields with the lw prefix must remain in the content documents. Failure to do so results in incremental crawls not working as expected. If you are going to filter on fields, leave the lw fields as-is. |

Force full crawl

To perform incremental crawls, the Force Full Crawl property must be disabled, which it is by default

If a crawl starts, and Force Full Crawl is enabled, incremental crawl behavior is skipped and instead a full crawl is performed:

-

MapDb tables are recreated and previously persisted data is deleted.

-

The plugin performs the requests of all SharePoint objects just like the first crawl.

-

All sharepoint-items are reindexed.

-

Given incremental crawl is skipped, changes with

deleteare not detected and documents deleted from the SharePoint server are not removed from the index, resulting in orphaned documents.

Be aware for SharePoint Optimized V2 connector <v2.0.0:

-

After a first crawl, MapDb information is persisted in PVC if configured, otherwise it is saved in the

/tmppath. -

If PVC is configured in the environment and you need to perform full-crawls from scratch:

-

Clear the datasource as it also removes MapDb.

-

-

If PVC is not configured and it is needed to perform full crawls from scratch:

-

Clearing the datasource does not remove the MapDb saved in the

/tmppath from a previous crawl. -

Enable the Force Full Crawl property, as this forces MapDb files to be removed.

-

Since SharePoint Optimized V2 connector v2.0.0, to perform full-crawls from scratch: * Clear the datasource and enable the full-crawl property. * It does not matter if PVC is configured or not, as MapDb information is not persisted by the plugin.

Crawl or incremental crawl and MapDb

At crawl time, MapDb is used to persist:

-

SharePoint objects metadata (sites, lists, items, and attachments). The plugin reads the metadata saved and builds the documents to send to the connector server.

-

siteCollections checkpoints.

-

Permissions inheritance (list of all SharePoint objects and permissions associated).

-

Site-collection taxonomy terms (if property is enabled).

-

List of siteCollections.

-

Folders fetched.

| Since SharePoint Optimized V2 connector v1.5.0, SharePoint objects metadata is not persisted as the metadata is removed when the crawl finishes. |

When the incremental crawl is performed, SharePoint Optimized V2 connector <v2.0.0 uses the persisted information saved in previous crawls:

-

siteCollections checkpoints to know where to start a subsequent crawl (incremental crawl).

-

Information related to permissions inheritance to know which permissions and documents to update.

-

Information related to the list of siteCollections to perform siteCollection deletion.

-

Information related to the list of folders to update folders/nested folders.

-

Taxonomy terms to perform incremental crawl of this feature.

When mapDb is persisted between crawls, this information needs to be deleted to perform a full recrawl.

| Since SharePoint Optimized V2 connector v2.0.0, the plugin does not need to persist the information mentioned above: site collection checkpoints, list of site collections, folders, or permissions. Only taxonomy terms requires mapDb usage and is needed only at crawl time. |